How to use Looker Studio with code-free, ready-to-go data automation for Amazon Sellers

Google has rebranded its cloud BI product, Data Studio, as Looker Studio. Existing users can keep building custom dashboards and reports without having to learn a new tool, and Google says that the look and feel of the dashboards will remain unchanged.

What is Looker Studio?

Looker Studio is a free web-based reporting tool that allows you to create data visualizations, dashboards, and reports. It’s easy to use and can be accessed from any device with an internet connection. You can insert charts, tables, maps, and more into your sales or marketing dashboard. Once you have created your report, you can share it with others or publish it on the web so that all of your team members can see the latest changes.

Looker Studio is a strong option for people who want to graduate from Excel or Google Sheets without the additional cost of other business intelligence tools.

Looker and Amazon, Better Together

We are excited to announce that Looker Studio, like Google Data Studio before it, can leverage our Amazon Seller Central (SP-API) partner connectors. The SP-API, the successor to Amazon Marketplace Web Service (MWS), delivers a new type of seller tool that gives business and marketing teams new analytics capabilities.

Looker Studio paired with Amazon Seller Central data is a powerful combination for sellers who want to create a simple dashboard or in-depth comprehensive reports and visualizations.

As a data source, Amazon Seller Central offers a default dashboard that allows you to manage your products, inventory, and orders. The Seller dashboard surfaces key metrics such as units ordered and total sales.

What if you want to do a different type of analysis, report, or visualization? While the default Seller Central UI is sufficient for day-to-day management, bringing the data into your own reporting tool, like Looker Studio, allows you to undertake an in-depth analysis of organic sales, wasteful ad spending, and search history.

You can connect to data directly from Amazon Seller Central via API and use this data directly within Looker Studio. With this connection, you can create more comprehensive reports for yourself quickly and easily.

What Is Possible With Looker Studio + Seller Central?

For data-driven Amazon merchants, the Amazon Selling Partner API (SP-API) connector supplies key business insights so you can measure the impact of retail operations. The SP-API connector allows direct, code-free access to daily or real-time Seller Central data through Looker Studio.

Openbridge offers connectors for a range of Seller services:

- Amazon Inventory: Get FBA Inventory Reports data for the listing, condition, disposition, and quantity to help with day-to-day inventory.

- Amazon Fulfillment: Get comprehensive, product-level detail on inbound shipments, shipped FBA orders, quantity, tracking, and shipping with FBA Fulfillment Reports and Inbound Fulfillment API.

- Amazon Orders: Order and item information for both FBA and seller-fulfilled orders, including order status, fulfillment and sales channel information, and item details, with the Order API and FBA Orders Reports.

- Amazon Finance: Balances, payouts, and estimated and actual selling, storage, and fulfillment fee data with FBA Settlement Reports, FBA Fees, and the Finance API.

- Detail Sales & Traffic: Business-level Sales and Traffic reports offer performance metrics for product sales, revenue, units ordered, and page traffic metrics such as page views and buy box.

- Vendor Central: Manage retail business operations with automated integration so vendors can improve and maintain performance at scale while growing their business.

- Retail Analytics: Vendor Retail Analytics delivers ordered revenue, glance views, conversion, replenishable out-of-stock, lost buy box, returns, replacements, and many more.

- Brand Analytics: Brand Analytics offers sellers and vendors market basket analysis, search terms, repeat purchases, alternate purchases, item comparisons, and much more.

Amazon Advertising + Looker Studio

Looker Studio is a powerful tool for business users and marketers to fuse retail sales data with Amazon Advertising data from the Amazon Demand-side Platform (Amazon DSP) and Amazon Attribution services.
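To make the idea of fusing retail sales with advertising data concrete, here is a minimal Python sketch. It is not the connector's actual output or schema: the field names (`ordered_revenue`, `ad_spend`, `ad_sales`), the sample rows, and the `fuse_sales_and_ads` helper are all hypothetical. It also assumes, as a simplification, that organic sales can be approximated as ordered revenue minus ad-attributed sales. ACOS is computed as ad spend divided by ad-attributed sales.

```python
# Hypothetical daily rows; real connector tables will use different fields.
sales_rows = [
    {"date": "2023-01-01", "asin": "B000TEST01", "ordered_revenue": 500.0},
    {"date": "2023-01-01", "asin": "B000TEST02", "ordered_revenue": 200.0},
    {"date": "2023-01-02", "asin": "B000TEST01", "ordered_revenue": 300.0},
]
ad_rows = [
    {"date": "2023-01-01", "asin": "B000TEST01", "ad_spend": 50.0, "ad_sales": 150.0},
    {"date": "2023-01-02", "asin": "B000TEST01", "ad_spend": 40.0, "ad_sales": 0.0},
]


def fuse_sales_and_ads(sales, ads):
    """Left-join sales rows to ad rows on (date, asin) and derive metrics."""
    ads_by_key = {(row["date"], row["asin"]): row for row in ads}
    fused = []
    for sale in sales:
        # Products with no ad activity on a given day get zero spend and sales.
        ad = ads_by_key.get((sale["date"], sale["asin"]), {})
        ad_spend = ad.get("ad_spend", 0.0)
        ad_sales = ad.get("ad_sales", 0.0)
        fused.append({
            "date": sale["date"],
            "asin": sale["asin"],
            "ordered_revenue": sale["ordered_revenue"],
            "ad_spend": ad_spend,
            "ad_sales": ad_sales,
            # Simplifying assumption: anything not ad-attributed is organic.
            "organic_sales": sale["ordered_revenue"] - ad_sales,
            # ACOS is undefined when there are no ad-attributed sales.
            "acos": ad_spend / ad_sales if ad_sales else None,
        })
    return fused


for row in fuse_sales_and_ads(sales_rows, ad_rows):
    print(row)
```

Rows with ad spend but no ad-attributed sales (the third row here) are one rough signal of wasteful ad spending. In practice, a join like this would more likely live in a warehouse query or a Looker Studio data blend than in a script.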

Connect Shopify to Looker Studio

Connecting Looker Studio and Shopify is available with pre-built, ready-to-go connectors. See the Shopify connector for more details.

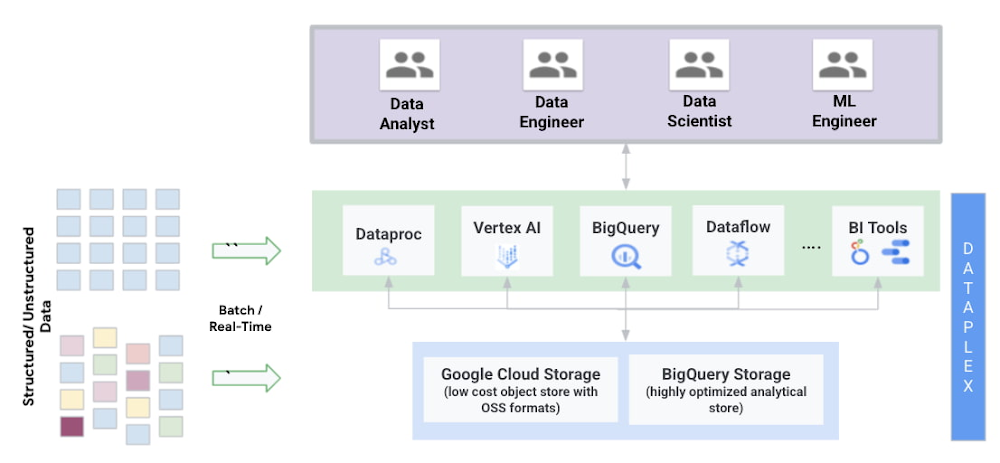

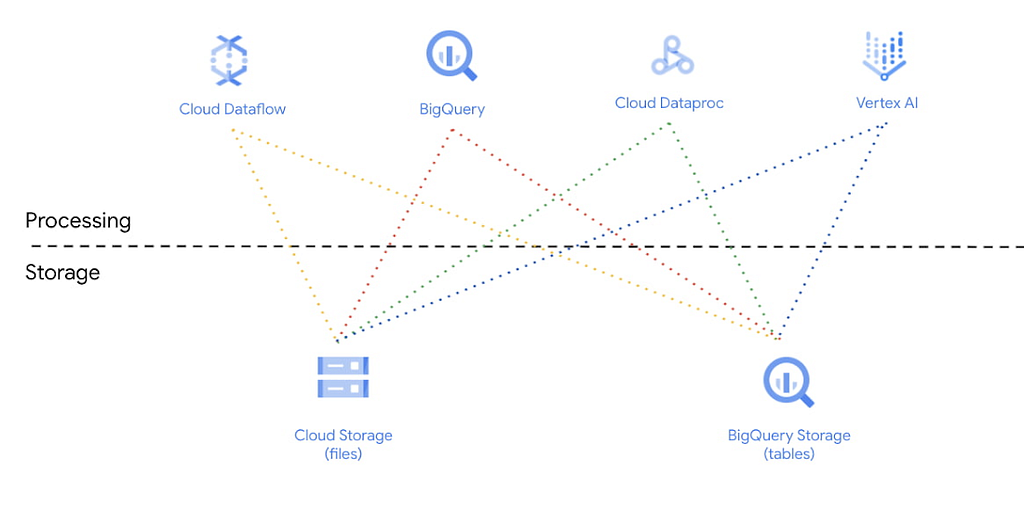

Looker Studio + Google BigQuery

Looker Studio works best when paired with a native connector to Google BigQuery.

If you don’t already have a Google Cloud account, I highly recommend signing up; it only takes a couple of minutes. Plus, we can offer an additional $200 in free credits!

The Google Cloud Platform Free Trial, plus the additional partner credits, provides you with hundreds of dollars to try the Cloud Platform. Whether you are building software or automobiles, looking to create the next big thing, or wanting to put your company on the same infrastructure that powers Google, Google Cloud Platform has the technology and the partners to help grow your business.

Connecting Looker Studio to BigQuery is simple because Google provides the connector ready-built. Once your Amazon Seller Central data is in BigQuery, you can use BigQuery and Looker Studio to create your own dashboards. This is a great way to make a dashboard that is more flexible and powerful than the reporting UI that Amazon provides.
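As an illustration of the kind of query that could sit behind such a dashboard, the sketch below rolls order lines up into daily units and sales. The `orders` table and its columns are hypothetical, not the schema the connectors create. Python's built-in `sqlite3` stands in for BigQuery purely so the example runs locally; in BigQuery the table would be referenced by its project, dataset, and table name, and the same style of `SELECT` could be pasted into the BigQuery connector's custom query option in Looker Studio.

```python
import sqlite3

# Hypothetical rollup: one row per day with units ordered and total sales.
DAILY_SALES_SQL = """
SELECT order_date,
       SUM(quantity) AS units_ordered,
       SUM(quantity * item_price) AS total_sales
FROM orders
GROUP BY order_date
ORDER BY order_date
"""


def daily_sales(rows):
    """Load order lines into an in-memory table and run the rollup query."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders ("
        "order_date TEXT, sku TEXT, quantity INTEGER, item_price REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", rows)
    result = conn.execute(DAILY_SALES_SQL).fetchall()
    conn.close()
    return result


# Made-up order lines: (order_date, sku, quantity, item_price).
sample_orders = [
    ("2023-01-01", "SKU-A", 2, 10.0),
    ("2023-01-01", "SKU-B", 1, 25.0),
    ("2023-01-02", "SKU-A", 3, 10.0),
]

for day in daily_sales(sample_orders):
    print(day)
```

Pre-aggregating in the warehouse like this keeps the dashboard simple: Looker Studio only has to chart one row per day instead of scanning every order line.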

Looker Studio Pricing

Looker Studio is free, though there is a Looker Studio Pro version that Google charges for. For more information on pricing for the Pro version, Google requires you to talk to a Cloud sales specialist.

Looker Studio Alternatives

Not interested in Looker Studio? Explore, analyze, and visualize data to deliver faster innovation while avoiding vendor lock-in using tools like Tableau, Microsoft Power BI, Looker, Amazon QuickSight, SAP, Alteryx, dbt, Azure Data Factory, Qlik Sense, and many others.

Get Started Now

Openbridge has developed the automated API connector code, which means there is nothing for you to program. We are an official connector provider for the Amazon Selling Partner API and the Amazon Advertising API. Openbridge is an Amazon Advertising Partner tools provider and an approved PII data supplier for Seller Central.

Fuel financial forecasting, marketing analysis, sales reporting, and marketing optimization. Create a new custom dashboard, enhance an existing dashboard, or perform a breakdown of sales with Looker Studio.

Looker Studio + Amazon Seller Central was originally published in Openbridge on Medium, where people are continuing the conversation by highlighting and responding to this story.
